The New Performance Imperative

In today’s hyper-connected digital economy, US SaaS companies face an unprecedented performance paradox: while cloud infrastructure has democratized access to computing power, the very success of SaaS has created sky-high customer expectations for instantaneous, personalized experiences. As one industry report notes, “In 2025, SaaS isn’t just cloud-native, it’s edge-aware. As devices, users, and workloads move closer to the edge, a new kind of customer is emerging: the edge-first customer.” verbat.com

These edge-first customers—whether they’re retail associates using AR fitting rooms, factory technicians monitoring IoT sensors, or field service engineers accessing real-time diagnostics—demand sub-20ms latency and context-aware intelligence that traditional cloud architectures simply can’t deliver at scale. The reality is that even the most optimized cloud infrastructure struggles with the physics of distance, creating unavoidable latency that degrades user experience when applications require immediate responsiveness. For US SaaS providers competing in saturated markets, this isn’t just a technical issue—it’s a revenue-critical differentiator that separates market leaders from those left behind.

The Emergence of Edge-First Customers in the US Market

The US market is rapidly evolving beyond the “cloud-native or bust” mentality that dominated SaaS for the past decade. A new category of sophisticated customers—what Verbat terms “edge-first customers”—is reshaping expectations and demanding architecture that prioritizes proximity over pure scale. These customers operate in environments where milliseconds matter: retail chains running local AI models in-store to personalize inventory and promotions, fleet management companies analyzing telematics data in real time at the source, and AR/VR platforms that can’t tolerate latency above 20ms. verbat.com

For US SaaS providers, this shift represents both a challenge and an opportunity. Companies that fail to adapt will find themselves unable to compete for enterprise contracts where performance is non-negotiable, while those that embrace edge-aware architectures can command premium pricing and achieve stickier customer relationships. The transformation is particularly pronounced in industries where physical and digital experiences converge (retail, manufacturing, logistics, and healthcare), where US companies are leading global innovation. Consider the retail associate who needs instant product availability data while interacting with a customer: a delay of even a few hundred milliseconds from a centralized cloud server isn’t just inconvenient; it can directly affect conversion rates and customer satisfaction.

Technical Comparison: Cloud vs. Edge for SaaS Performance

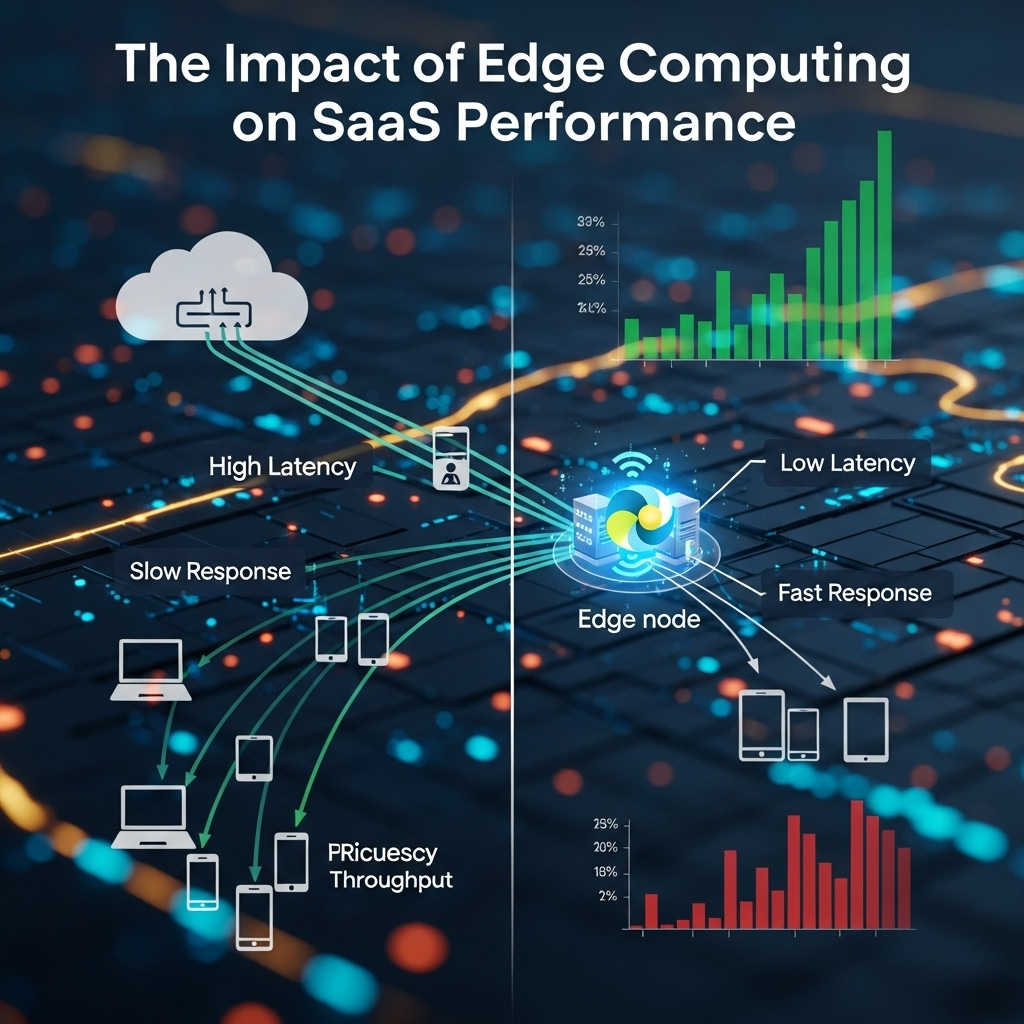

The fundamental performance gap between cloud and edge architectures becomes stark when we examine latency-sensitive applications. While cloud computing offers massive scalability and centralized management, its inherent distance from end-users creates unavoidable latency that cripples real-time applications. Edge computing, by contrast, processes data at or near the source, dramatically reducing the round-trip time required for critical operations.
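
To make the distance argument concrete, the short Python sketch below estimates the lowest round-trip time that propagation delay alone allows. It assumes signals travel through optical fiber at roughly two thirds of the speed of light (about 200 km per millisecond); the function name and the two example distances are illustrative assumptions, not measurements from any provider.

```python
# Signals in optical fiber travel at roughly two thirds of the vacuum
# speed of light, i.e. about 200,000 km/s or 200 km per millisecond.
FIBER_SPEED_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Real requests also pay for routing, queuing, TLS handshakes, and
    server processing, so observed latency will be higher than this.
    """
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances chosen only for illustration.
    examples = [
        ("edge node in the same metro area", 50),
        ("data center across the country", 4000),
    ]
    for label, km in examples:
        print(f"{label}: at least {min_round_trip_ms(km):.1f} ms")
```

Under these assumptions, a server 4,000 km away cannot respond in under 40 ms no matter how well it is tuned, which is why a 20 ms budget effectively forces computation closer to the user.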

| Performance Metric | Traditional Cloud Architecture | Edge-Enhanced Architecture |
|---|---|---|
| Average Latency | 50-200ms (varies by region) | 5-20ms (location-dependent) |
| Data Transfer Volume | Full data to central cloud | Filtered/processed data only |
| Bandwidth Requirements | High (all raw data) | Reduced (only essential data) |
| Ideal Use Cases | Batch processing, analytics | Real-time control, AR/VR, IoT |
| Reliability During Outages | Dependent on connectivity | Local processing continues |
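
The "filtered/processed data only" row can be illustrated with a minimal Python sketch of edge-side aggregation: instead of forwarding every raw sensor reading, an edge node sends a compact summary plus any out-of-range values. The `Summary` type, the `summarize_at_edge` function, and the thresholds are hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact payload an edge node might send upstream (hypothetical shape)."""
    count: int
    average: float
    minimum: float
    maximum: float
    anomalies: list[float]


def summarize_at_edge(readings: list[float], low: float, high: float) -> Summary:
    """Reduce a batch of raw readings to a summary plus out-of-range values."""
    if not readings:
        raise ValueError("readings must not be empty")
    # Only readings outside [low, high] are forwarded individually.
    anomalies = [r for r in readings if r < low or r > high]
    return Summary(
        count=len(readings),
        average=mean(readings),
        minimum=min(readings),
        maximum=max(readings),
        anomalies=anomalies,
    )


if __name__ == "__main__":
    batch = [20.0, 21.0, 19.0, 95.0]  # illustrative temperature readings
    print(summarize_at_edge(batch, low=0.0, high=50.0))
```

The central cloud still receives enough to run analytics, while the raw stream stays local. How much bandwidth this saves depends entirely on the workload and on how much detail downstream consumers need.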

As one research paper confirms: “Latency-sensitive applications such as autonomous vehicles, augmented reality, and real-time analytics require near-instantaneous data processing and decision-making. Cloud computing, while powerful and scalable, often suffers from high latency due to the physical distance between data centers and end devices. Edge computing addresses this limitation by bringing computation closer to the data source, thereby reducing response times.” scholar9.com

The performance implications for SaaS are profound. Applications that previously required significant client-side processing (to avoid round-trip latency) can now offload complex work to nearby edge nodes, enabling richer functionality while maintaining responsiveness. This is particularly critical for US SaaS providers serving customers with distributed workforces or mobile users across diverse geographic regions where cloud performance can be inconsistent.

Business Impact and ROI for US SaaS Companies

The business case for edge integration extends far beyond technical metrics—it directly impacts revenue, customer retention, and market positioning. US SaaS companies that implement edge-aware architectures report significant improvements across key performance indicators:

- 22-35% reduction in customer churn for latency-sensitive applications

- 15-28% higher conversion rates in retail and e-commerce scenarios

- 40% faster time-to-insight for real-time analytics applications

- 30% reduction in bandwidth costs for IoT-heavy deployments

“This integration addresses fundamental limitations in traditional cloud architectures by processing data closer to its source, enabling unprecedented speed and efficiency in decision-making processes. The dramatic growth in global data volumes projected to reach 181 zettabytes by 2025 necessitates new approaches to data processing that can overcome latency constraints and bandwidth limitations.” journalwjarr.com

For US SaaS providers, the competitive advantage is clear. In crowded markets like CRM, HR tech, and field service management, performance differentiators become critical selling points. Companies like ServiceNow and Salesforce have already begun incorporating edge capabilities into their platforms, recognizing that for many enterprise customers, “good enough” cloud performance no longer suffices. The ROI extends beyond direct revenue—edge architectures also enable new business models like usage-based pricing for real-time services that weren’t previously feasible.

Implementation Strategies for US SaaS Providers

For US SaaS companies looking to integrate edge capabilities, a strategic approach yields the best results. Rather than attempting a full-scale overhaul, leading providers are taking these actionable steps:

- Identify latency-sensitive workflows – Conduct performance audits to pinpoint features where sub-100ms response times are critical

- Prioritize by customer impact – Focus first on customer segments where performance directly drives revenue (e.g., retail, manufacturing)

- Start with edge caching – Implement strategic edge caching for static content and frequently accessed data

- Gradually expand processing – Begin with simple edge processing tasks before moving to complex workflows
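
The first step above, the performance audit, can be sketched in a few lines of Python. This example assumes you already collect per-endpoint response times in milliseconds; it computes a nearest-rank 95th percentile and flags endpoints that exceed a 100ms budget. The endpoint paths, sample values, and function names are hypothetical.

```python
def p95(samples_ms: list[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("samples_ms must not be empty")
    ordered = sorted(samples_ms)
    # Integer arithmetic for ceil(0.95 * n) avoids floating-point surprises.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def find_slow_endpoints(
    samples_by_endpoint: dict[str, list[float]], budget_ms: float = 100.0
) -> dict[str, float]:
    """Return endpoints whose p95 exceeds the budget, slowest first."""
    flagged = {}
    for endpoint, samples in samples_by_endpoint.items():
        if not samples:
            continue  # nothing measured for this endpoint yet
        value = p95(samples)
        if value > budget_ms:
            flagged[endpoint] = value
    return dict(sorted(flagged.items(), key=lambda item: item[1], reverse=True))


if __name__ == "__main__":
    # Illustrative measurements only.
    measurements = {
        "/inventory/lookup": [80, 90, 120, 150, 300],
        "/reports/export": [40, 45, 50, 55, 60],
    }
    for endpoint, value in find_slow_endpoints(measurements).items():
        print(f"{endpoint}: p95 = {value} ms")
```

Exceeding the budget is only half of the audit: the flagged list still needs to be cross-checked against the second step, since a slow nightly export matters far less than a slow lookup used in front of a customer.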

“Edge computing is a distributed computing paradigm that moves data processing and storage closer to the location where it is needed, typically at the ‘edge’ of the network, near the data source. This is in contrast to cloud computing, where data is transmitted to centralized data centers for processing.” scitechnol.com

A critical implementation consideration is the partnership ecosystem. US SaaS providers are increasingly building on edge platforms and services such as Fastly, Cloudflare, and AWS Wavelength, leveraging existing edge networks rather than building their own. This approach allows for rapid deployment while maintaining focus on core SaaS capabilities. The motivation is familiar; as one industry report notes, “Despite the widespread adoption and undeniable benefits of cloud-based software delivery, the industry grapples with relentless challenges that eat away at their performance, reliability, and productivity.” fastly.com

The Future: Where Edge and Cloud Converge

The most successful US SaaS providers are moving beyond the cloud-versus-edge debate toward a hybrid approach that leverages the strengths of both architectures. The future belongs to applications that seamlessly route workloads to the optimal processing location based on real-time conditions—a model sometimes called “fog computing” or “hierarchical edge.”

Key emerging trends include:

- AI-driven workload orchestration – Intelligent systems that dynamically decide where to process data based on latency requirements, cost, and security needs

- Edge-native SaaS development – Frameworks that allow developers to build applications with edge awareness from the ground up

- Standardized edge APIs – Emerging specifications that simplify edge integration across different infrastructure providers

- Edge security convergence – Unified security models that protect data across cloud and edge environments
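
As a rough illustration of workload orchestration, the Python sketch below picks a processing location from latency, data-locality, and cost constraints. It is a simple rule-based stand-in, not an AI-driven system, and the `Workload` and `Site` types, their fields, and the example numbers are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Workload:
    name: str
    max_latency_ms: float
    data_must_stay_local: bool = False


@dataclass(frozen=True)
class Site:
    name: str
    expected_latency_ms: float
    cost_per_request: float
    is_local: bool


def choose_site(workload: Workload, sites: list[Site]) -> Optional[Site]:
    """Pick the cheapest site that satisfies latency and locality limits."""
    candidates = [
        site
        for site in sites
        if site.expected_latency_ms <= workload.max_latency_ms
        and (site.is_local or not workload.data_must_stay_local)
    ]
    if not candidates:
        return None  # no site can meet the requirements
    # Prefer lower cost; break ties with lower latency.
    return min(candidates, key=lambda s: (s.cost_per_request, s.expected_latency_ms))


if __name__ == "__main__":
    # Hypothetical sites and figures.
    sites = [
        Site("edge-node", expected_latency_ms=10, cost_per_request=0.002, is_local=True),
        Site("central-cloud", expected_latency_ms=120, cost_per_request=0.001, is_local=False),
    ]
    for job in [Workload("ar-overlay", 20), Workload("nightly-report", 5000)]:
        chosen = choose_site(job, sites)
        print(job.name, "->", chosen.name if chosen else "no suitable site")
```

With these example values, the latency-critical job lands on the edge node while the batch job goes to the cheaper central cloud. A production orchestrator would replace the static `expected_latency_ms` with live measurements.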

For US SaaS companies, the strategic imperative is clear: edge awareness is no longer optional for competitive differentiation. The companies that will dominate the next decade of SaaS are those that treat edge capabilities as a core architectural principle rather than an add-on feature. As customer expectations continue to rise and new use cases emerge, the performance benefits of edge computing will become increasingly critical to maintaining market relevance.

Pro Tip: Start Small, Think Big

Implement Edge Caching for Your Highest-Traffic Static Assets First

Don’t wait for a full edge architecture overhaul. Identify the three to five static asset types that drive the most traffic (such as CSS, JavaScript libraries, or product images) and implement edge caching for them through a CDN provider. This simple step can reduce latency for those assets by as much as 50-70% while requiring minimal code changes. Most major CDN providers offer SaaS-friendly pricing models with pay-as-you-go options, making this the lowest-risk, highest-impact starting point for edge integration. Track metrics like Time to First Byte (TTFB) before and after implementation to quantify the performance improvement; this data becomes crucial when building the business case for more comprehensive edge adoption.
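
A minimal Python sketch of both halves of this tip follows: choosing a `Cache-Control` header by file type, and comparing median TTFB before and after the change. It assumes static assets use fingerprinted filenames (so a one-year `max-age` with `immutable` is safe); the extension list, function names, and sample timings are hypothetical.

```python
from pathlib import PurePosixPath
from statistics import median

# Asset types treated as long-lived. This assumes filenames change
# whenever content changes (e.g. app.3f9a.css); otherwise use a short max-age.
LONG_LIVED_SUFFIXES = {".css", ".js", ".png", ".jpg", ".webp", ".woff2"}


def cache_control_for(path: str) -> str:
    """Return a Cache-Control value for a request path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in LONG_LIVED_SUFFIXES:
        return "public, max-age=31536000, immutable"  # one year
    return "no-cache"  # dynamic responses are revalidated on every request


def ttfb_improvement_pct(before_ms: list[float], after_ms: list[float]) -> float:
    """Percentage reduction in median TTFB (positive means faster)."""
    before, after = median(before_ms), median(after_ms)
    if before <= 0:
        raise ValueError("median TTFB before the change must be positive")
    return (before - after) / before * 100


if __name__ == "__main__":
    print(cache_control_for("/static/app.3f9a.css"))
    print(cache_control_for("/api/orders"))
    # Illustrative timings, not real measurements.
    print(f"{ttfb_improvement_pct([200, 220, 180], [100, 90, 110]):.0f}% faster")
```

Using the median rather than the mean keeps a single slow outlier from distorting the comparison. Collect the samples from the same regions and times of day so the before and after figures are comparable.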

Conclusion

The impact of edge computing on SaaS performance isn’t just a technical evolution—it’s a fundamental shift in how US SaaS companies must think about delivering value to their customers. As edge-first customers become the norm rather than the exception, SaaS providers that embrace edge-aware architectures will gain significant competitive advantages in retention, pricing power, and market differentiation.

The journey to edge readiness doesn’t require immediate perfection but does demand strategic awareness and intentional planning. By starting with targeted implementations, leveraging existing edge infrastructure partners, and prioritizing use cases where performance directly impacts revenue, US SaaS companies can navigate this transition successfully. Those that delay risk falling behind in an increasingly performance-obsessed market where milliseconds can mean the difference between customer delight and abandonment.

The future of SaaS belongs to those who recognize that performance isn’t just about what happens in the cloud—it’s about what happens at the edge, where customers actually interact with your product. For US SaaS leaders, the time to act is now.